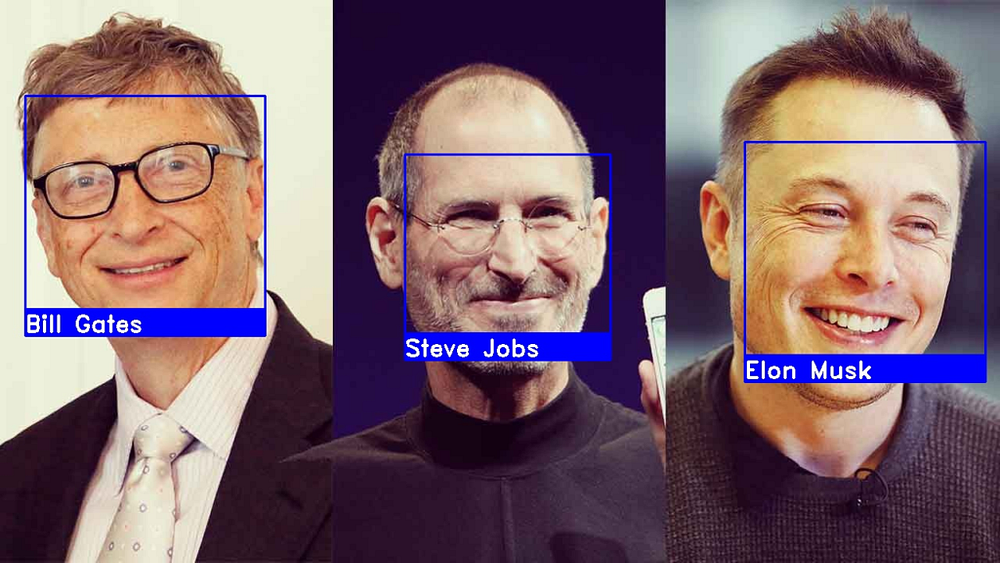

Facial Image Prediction Using TensorFlow and ResNet

INTRODUCTION:

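Residual Networks (ResNets) learn residual functions with reference to the layer inputs rather than unreferenced functions. Instead of hoping that every few stacked layers directly fit a desired underlying mapping H(x), a residual network lets those layers fit the residual F(x) = H(x) - x, and a shortcut connection adds the input back so that the block outputs F(x) + x. The code in this post uses a ResNet50 pretrained on ImageNet as a frozen feature extractor and trains a small classification head on top of it, with one folder of face images per person.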
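To make the idea of a residual mapping concrete, here is a minimal NumPy sketch of a single residual block. It is a simplification and not how ResNet50 is implemented: real ResNet blocks use convolutions and batch normalization, while this sketch assumes two small dense layers, and the names `residual_block`, `w1` and `w2` are made up for illustration.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F is two small dense layers."""
    residual = relu(x @ w1) @ w2   # F(x): the part the layers have to learn
    return relu(residual + x)      # shortcut connection adds the input back

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))        # 4 samples with 8 features each

# With all-zero weights F(x) is zero, so the block reduces to relu(x):
# the layers only have to learn a correction on top of the identity.
zeros = np.zeros((8, 8))
out = residual_block(x, zeros, zeros)
print(np.allclose(out, relu(x)))
```

The shortcut is why very deep stacks of such blocks stay trainable: a block that has nothing useful to add can fall back to passing its input through.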

CODE 😃👇:

import numpy as np
import cv2
import os
from tensorflow.keras.applications import ResNet50
from tensorflow.keras.models import Model, load_model
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.utils import to_categorical
from sklearn.model_selection import train_test_split

# Step 1: Load and preprocess the dataset
def load_dataset(data_path):
    images = []
    labels = []
    label_map = {}  # class index -> folder name
    label_counter = 0
    for label_name in os.listdir(data_path):
        label_dir = os.path.join(data_path, label_name)
        if os.path.isdir(label_dir):
            label_map[label_counter] = label_name
            print(f"Processing label: {label_name} ({label_counter})")  # Debugging line
            for image_name in os.listdir(label_dir):
                img_path = os.path.join(label_dir, image_name)
                img = cv2.imread(img_path)
                # Check if the image is read properly
                if img is None:
                    print(f"Warning: Skipping unreadable image {img_path}")
                    continue
                # Resize the image for ResNet50
                img = cv2.resize(img, (224, 224))
                images.append(img)
                labels.append(label_counter)
            # Move to the next class index only after a folder has been processed
            label_counter += 1
    # Check if images list is empty
    if len(images) == 0:
        raise ValueError("No images found in the dataset. Please check the dataset directory.")
    # Scale pixel values to [0, 1]
    images = np.array(images, dtype='float32') / 255.0
    # Pass num_classes explicitly so the one-hot width matches label_map
    # even if the last folder contained no readable images
    labels = to_categorical(labels, num_classes=len(label_map))
    return images, labels, label_map
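One thing to keep in mind: `load_dataset` assigns class indices in whatever order `os.listdir` returns the folders, and that order is not guaranteed to be the same across machines or runs. The sketch below shows one way to make the mapping reproducible by sorting the folder names first. `build_label_map` is a hypothetical helper, not part of the script above, and the folder names in the demo are made up.

```python
import os
import tempfile

def build_label_map(data_path):
    """Map class index -> folder name, in sorted (reproducible) order."""
    folders = sorted(
        name for name in os.listdir(data_path)
        if os.path.isdir(os.path.join(data_path, name))
    )
    return dict(enumerate(folders))

# Demo on a throwaway directory with made-up folder names
with tempfile.TemporaryDirectory() as tmp:
    for person in ["carol", "alice", "bob"]:
        os.mkdir(os.path.join(tmp, person))
    open(os.path.join(tmp, "notes.txt"), "w").close()  # plain files are ignored
    demo_map = build_label_map(tmp)

print(demo_map)  # {0: 'alice', 1: 'bob', 2: 'carol'}
```

With a stable mapping, a model trained on one machine keeps predicting the right names when the same dataset is reloaded elsewhere.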

# Step 2: Build ResNet50 model
def build_resnet50_model(input_shape, num_classes):
    base_model = ResNet50(weights='imagenet', include_top=False, input_shape=input_shape)
    base_model.trainable = False  # Freeze the base model
    x = base_model.output
    x = GlobalAveragePooling2D()(x)
    x = Dense(1024, activation='relu')(x)
    predictions = Dense(num_classes, activation='softmax')(x)
    model = Model(inputs=base_model.input, outputs=predictions)
    return model
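Because the base model is frozen, only the two `Dense` layers are trained. ResNet50 without its top ends in a 2048-channel feature map, so `GlobalAveragePooling2D` hands a 2048-dimensional vector to the head. The short calculation below estimates how many weights that head has; `dense_params` and `head_params` are illustrative helpers, and `num_classes=5` is an arbitrary example value.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def head_params(num_classes, feature_dim=2048, hidden_units=1024):
    """Trainable parameters in Dense(1024) followed by Dense(num_classes)."""
    return dense_params(feature_dim, hidden_units) + dense_params(hidden_units, num_classes)

print(head_params(num_classes=5))  # 2103301
```

Almost all of these parameters sit in the 2048-to-1024 layer, so the head size barely changes with the number of people in the dataset.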

# Step 3: Train the model
data_path = '/path/to/your/dataset'  # Update this to your dataset directory
X, y, label_map = load_dataset(data_path)

# Split the data manually
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.2, random_state=42)

# Create the ImageDataGenerators
datagen_train = ImageDataGenerator(rotation_range=20, width_shift_range=0.2, height_shift_range=0.2, horizontal_flip=True)
# fit() only matters for feature-wise normalization or ZCA whitening; it is harmless here
datagen_train.fit(X_train)
datagen_val = ImageDataGenerator()  # No augmentation for validation
datagen_val.fit(X_val)

# Create and compile the model
model = build_resnet50_model(input_shape=(224, 224, 3), num_classes=len(label_map))
model.compile(optimizer=Adam(learning_rate=0.0001), loss='categorical_crossentropy', metrics=['accuracy'])

# Train the model
history = model.fit(
    datagen_train.flow(X_train, y_train, batch_size=32),
    epochs=10,
    validation_data=datagen_val.flow(X_val, y_val, batch_size=32)
)

# Step 4: Evaluate the model (on the validation split; there is no separate test set)
val_loss, val_acc = model.evaluate(X_val, y_val)
print(f"Validation Accuracy: {val_acc:.2f}")

# Step 5: Save the model
model.save('face_recognition_resnet50.h5')
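The saved model file does not contain `label_map`, yet `recognize_face` needs it to turn a class index back into a name. If prediction runs in a separate script, one option is to store the mapping next to the model. The sketch below assumes a JSON file; the file name `label_map.json`, the helper names and the example labels are all made up for illustration.

```python
import json
import os
import tempfile

def save_label_map(label_map, path):
    with open(path, "w", encoding="utf-8") as f:
        json.dump(label_map, f)

def load_label_map(path):
    with open(path, encoding="utf-8") as f:
        raw = json.load(f)
    # JSON object keys are always strings, so restore the integer indices
    return {int(index): name for index, name in raw.items()}

example_map = {0: "alice", 1: "bob"}  # made-up labels
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "label_map.json")
    save_label_map(example_map, path)
    restored = load_label_map(path)

print(restored == example_map)
```

The `int(index)` conversion matters: without it, `label_map[label]` would fail with a `KeyError`, because the keys read back from JSON are strings.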

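# Step 6: Test on a single image (optional)
def recognize_face(model, image_path, label_map):
    img = cv2.imread(image_path)
    # cv2.imread returns None instead of raising when the file cannot be read
    if img is None:
        raise ValueError(f"Could not read image: {image_path}")
    img = cv2.resize(img, (224, 224))
    # Add a batch dimension and apply the same [0, 1] scaling used in training
    img = np.expand_dims(img, axis=0).astype('float32') / 255.0
    prediction = model.predict(img)
    label = int(np.argmax(prediction))
    print(f"Predicted label: {label_map[label]}")

# Example usage
recognize_face(load_model('face_recognition_resnet50.h5'), '/path/to/test/image.jpg', label_map)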
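`recognize_face` always prints the class with the highest score, even when the model is unsure or the face belongs to nobody in the dataset. A possible refinement, sketched below with NumPy only, is to report the top few candidates and fall back to "unknown" below a confidence threshold. `decode_prediction`, the 0.6 threshold and the example probabilities are assumptions for illustration, not part of the original script.

```python
import numpy as np

def decode_prediction(probs, label_map, threshold=0.6, top_k=3):
    """Return (name or 'unknown', ranked list of (name, probability))."""
    probs = np.asarray(probs).ravel()          # model.predict returns shape (1, num_classes)
    order = np.argsort(probs)[::-1][:top_k]    # indices of the highest scores first
    ranked = [(label_map[int(i)], float(probs[i])) for i in order]
    best_name, best_prob = ranked[0]
    name = best_name if best_prob >= threshold else "unknown"
    return name, ranked

example_map = {0: "alice", 1: "bob", 2: "carol"}   # made-up labels
confident = np.array([[0.05, 0.90, 0.05]])
uncertain = np.array([[0.40, 0.35, 0.25]])

print(decode_prediction(confident, example_map)[0])  # bob
print(decode_prediction(uncertain, example_map)[0])  # unknown
```

A suitable threshold depends on the dataset, so it is worth checking against held-out images rather than taking 0.6 as given.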
